What Are Website Crawlers?

A web crawler is a bot that visits and processes webpages to understand their content.

They go by many names, like:

- Crawler

- Bot

- Spider

- Spiderbot

Search engines use crawlers to discover and categorize webpages. And website owners can use crawler tools—such as backlink crawlers or technical audit bots—to monitor site performance, evaluate competitors, and improve SEO.

How Do Web Crawlers Work?

Web crawlers scan links, code, and content to gather information about a site.

As a website owner, you can use that information to improve your SEO strategy.

Links

Links show crawlers how webpages connect.

For example, if Page A links to Page B, the crawler follows the link and processes Page B.

This is why internal linking (linking between your own pages) is important for SEO.

Crawlers can also detect backlinks (links from other websites). Backlinks can improve your SEO. Crawler tools can help you identify both your backlinks and your competitors’ backlinks.
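Here is a rough sketch of the link-following step. It uses only Python's standard library (`html.parser` and `urllib.parse`). It pulls the `href` from every `<a>` tag on a page, resolves each one to an absolute URL, and sorts it into internal links (same host) or external links (other hosts). The page HTML and the `example.com` URLs are hypothetical. A real crawler would also fetch pages over HTTP, queue the links it finds, and respect robots.txt.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def classify_links(page_url, html):
    """Resolve each link against page_url and split it into internal/external."""
    parser = LinkExtractor()
    parser.feed(html)
    site = urlparse(page_url).netloc
    internal, external = [], []
    for href in parser.hrefs:
        absolute = urljoin(page_url, href)  # "/page-b" -> "https://www.example.com/page-b"
        if urlparse(absolute).netloc == site:
            internal.append(absolute)
        else:
            external.append(absolute)
    return internal, external


# Hypothetical "Page A" that links to Page B and to another website.
page_a = """
<html><body>
  <a href="/page-b">Page B</a>
  <a href="https://other-site.example/guide">A guide on another site</a>
</body></html>
"""

internal, external = classify_links("https://www.example.com/page-a", page_a)
print(internal)  # ['https://www.example.com/page-b']
print(external)  # ['https://other-site.example/guide']
```

A crawler would queue the internal URL (Page B) to process next. When a link like the external one appears on *another* site and points to yours, it counts as a backlink for you.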

Code

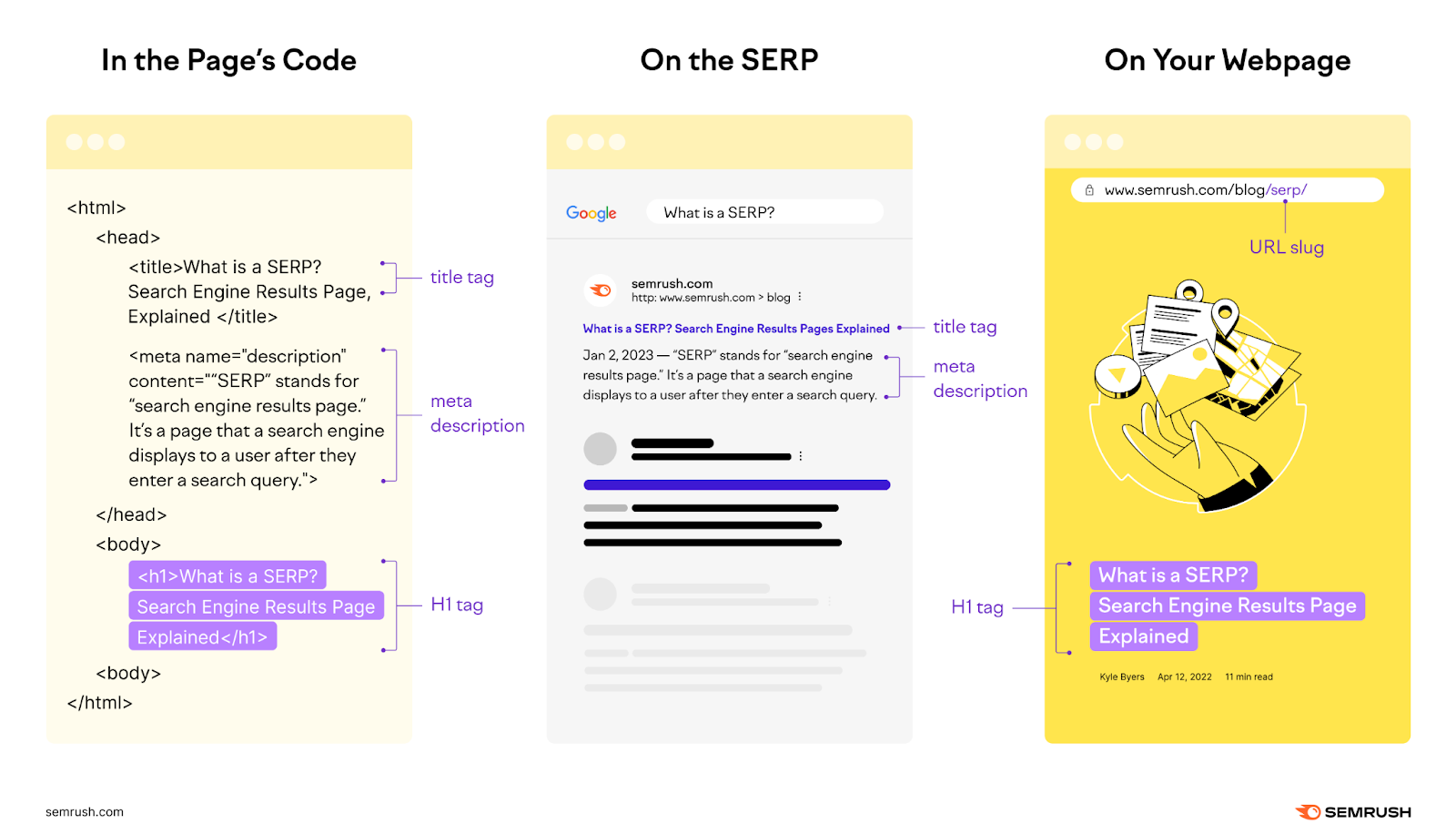

HTML elements like title tags, meta descriptions, and H1 tags signal a page’s topic to search engines and can help with rankings.

Here’s how different HTML elements can look in a webpage's code, on a search engine results page (SERP), and on the live webpage.

Crawling your site’s code can alert you to pages with missing or broken HTML elements. Fixing these errors can improve your site’s SEO and give search engines better context about each page, which can help you rank higher.
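The sketch below shows what a crawler checks when it flags missing HTML elements. It parses one page's HTML with Python's standard-library `html.parser` and reports a missing title tag, meta description, or H1. The `find_issues` helper and the sample page are hypothetical. Real audit tools check many more elements and also fetch the pages themselves.

```python
from html.parser import HTMLParser


class HeadAudit(HTMLParser):
    """Records the title text, meta description, and number of H1 tags."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = None
        self.h1_count = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content") or ""
        elif tag == "h1":
            self.h1_count += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def find_issues(html):
    """Return a list of missing-element issues for one page."""
    audit = HeadAudit()
    audit.feed(html)
    issues = []
    if not audit.title.strip():
        issues.append("missing title tag")
    if not (audit.meta_description or "").strip():
        issues.append("missing meta description")
    if audit.h1_count == 0:
        issues.append("missing H1")
    return issues


page = """<html><head><title>How to Choose a Hockey Stick</title></head>
<body><p>No heading here.</p></body></html>"""

print(find_issues(page))  # ['missing meta description', 'missing H1']
```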

Content

Crawlers scan text to understand a page’s topic and determine which queries it should rank for.

Comparing your content with top-ranking pages highlights keyword gaps. Filling those gaps and optimizing your content for what search engines favor can increase your chances of ranking higher.

How to Improve Your SEO with Web Crawler Tools

Crawler tools show you how search engines view your site, which helps you spot issues and uncover opportunities to improve your SEO.

Here are six useful crawler tools:

Backlink Crawler Tools

| | Bing Webmaster Tools | Semrush Backlinks Analytics |
|---|---|---|
| What It Is | A free tool to check backlinks and compare with up to two competitors | A tool for in-depth backlink data |
| Who It’s For | Users who want a quick snapshot of a site’s backlink profile | Those who want in-depth metrics on backlink strength, distribution, and competitor opportunities |

Crawling your backlinks and your competitors’ helps you identify potential link opportunities so you can build a stronger backlink profile and potentially improve your rankings.

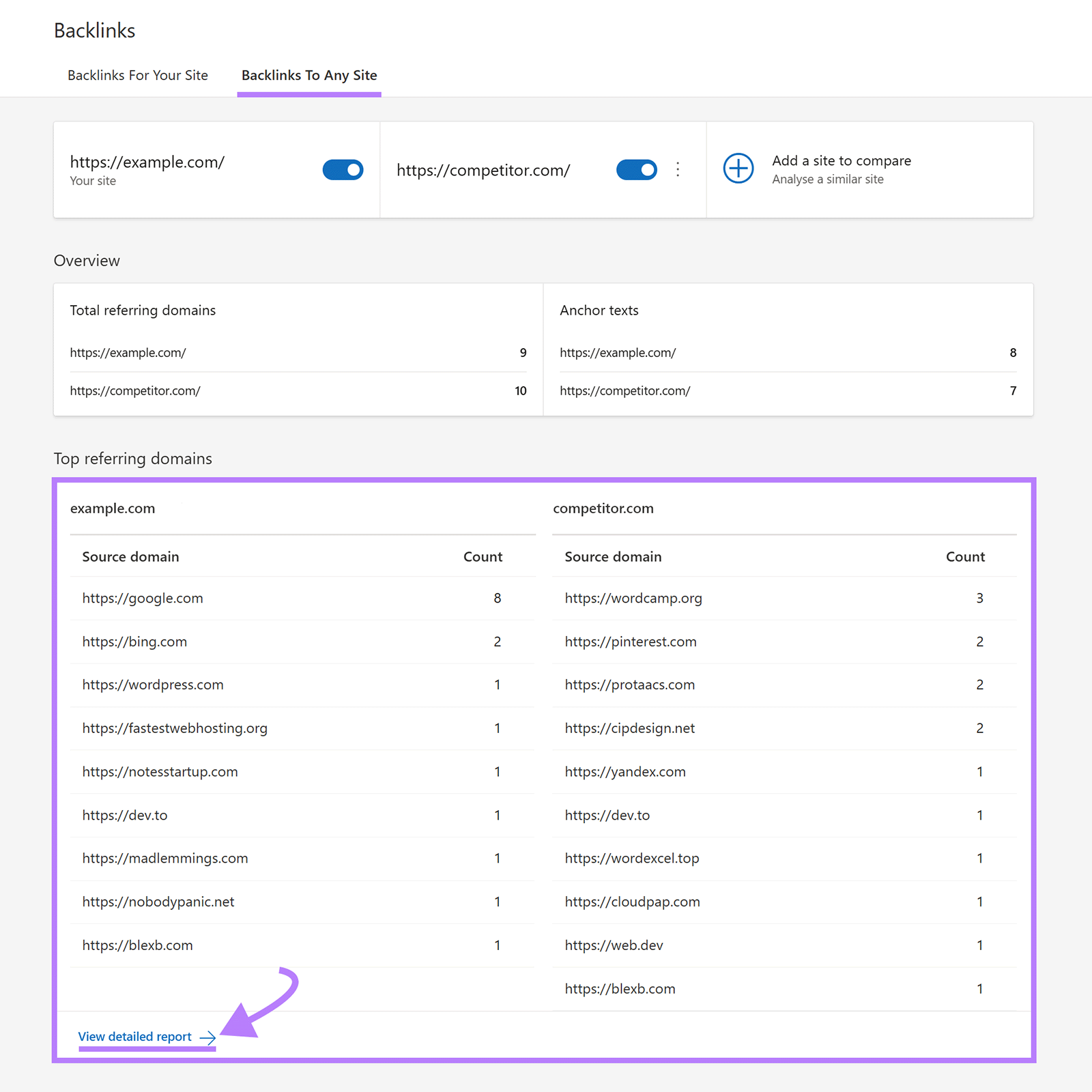

Bing Webmaster Tools is a free tool you can use to view high-level backlink data.

Open Webmaster Tools (or create your account).

Click “Backlinks” to see a list of your site’s backlinks. And click “Backlinks To Any Site” to compare your site with your competitors. Review the “Top referring domains” report and click “View detailed report” to see the full list.

For more detailed backlink data (like information on a backlink’s strength), use Semrush’s Backlinks.

Open the tool, enter the domain you’d like to view backlinks for, and click “Analyze.”

A report will load that shows you:

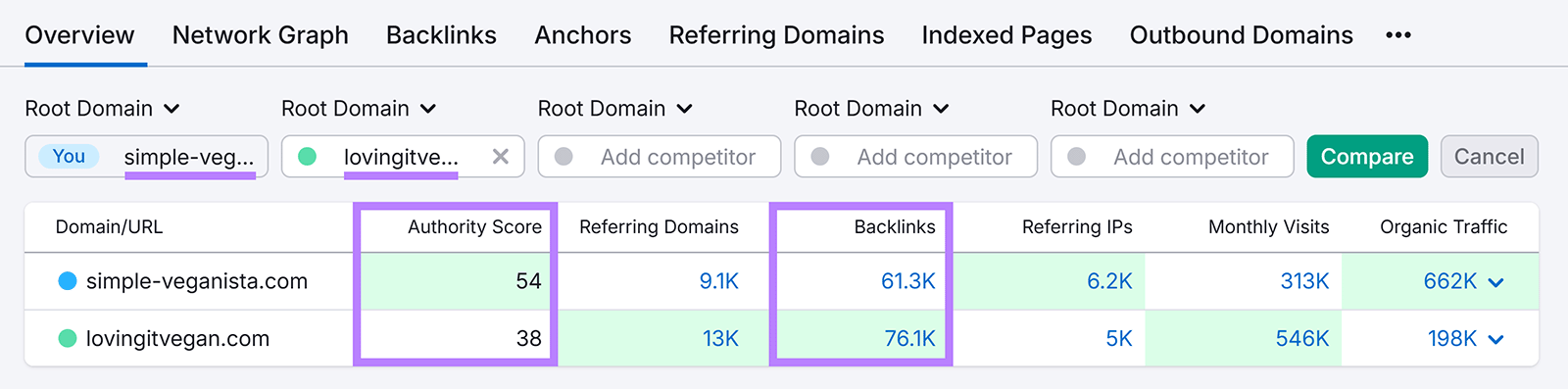

- Authority Score: A metric that measures the potential strength of a domain

- Referring Domains: The total number of domains pointing to a site

- Backlinks: The total number of backlinks pointing to a site

Click the “Backlinks” tab to see a list of all backlinks pointing to the site. Click “Best” to see the site’s strongest backlinks.

Consider trying to get backlinks from your competitors’ best backlink sources, which might help you build your site’s authority and outrank them in the SERPs.

Here’s an example of two recipe sites. Both have a comparable number of backlinks, but The Simple Veganista has a higher Authority Score.

And when we review the authority distribution for both sites, we can see that The Simple Veganista (left) has more authoritative backlinks overall compared to Loving It Vegan (right).

You might notice something similar when you run a backlink crawler on your own site, which could mean you need to build more quality backlinks from authoritative sources.

Site Audit Crawler Tools

| | Screaming Frog SEO Spider | Semrush Site Audit |
|---|---|---|
| What It Is | A desktop crawling tool that reviews on-page and technical errors | A tool that crawls your site and identifies technical and SEO issues |
| Who It’s For | SEOs and developers with technical knowledge | Marketers and site owners who want automated error detection plus “why and how to fix” guidance |

Site audit crawlers review your website for on-page and technical SEO errors. Fixing those errors can improve your site’s SEO.

Screaming Frog SEO Spider lets you crawl up to 500 pages for free, or you can buy a paid plan to crawl more.

After downloading the tool, enter your site’s URL and click “Start.” The tool will crawl your site. When done, it will show issues, warnings, and opportunities that you can fix to improve your SEO.

Click each issue to see the affected URLs and what you need to fix.
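To show roughly what happens after you click "Start," here is a Python sketch of a breadth-first crawl with a page limit, similar in spirit to the free 500-page cap. It reports one common issue: internal links that point to pages that don't exist. To keep it runnable offline, the site is a hypothetical in-memory dictionary that maps each URL to the URLs it links to. A real crawler would fetch and parse each page instead, and this is not how Screaming Frog is implemented internally.

```python
from collections import deque

# Hypothetical site: each URL maps to the internal URLs it links to.
SITE = {
    "/": ["/about", "/blog"],
    "/about": ["/"],
    "/blog": ["/blog/post-1", "/blog/old-post"],
    "/blog/post-1": ["/blog", "/contact"],
    "/contact": [],
}


def crawl(start, site, max_pages=500):
    """Breadth-first crawl from `start`, visiting at most `max_pages` URLs.

    Returns the visited URLs in crawl order and (source, target) pairs for
    links that point to pages missing from `site` (broken links).
    """
    visited, broken = [], []
    seen = {start}
    queue = deque([start])
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        if url not in site:  # only possible for a bad start URL
            continue
        visited.append(url)
        for link in site[url]:
            if link not in site:
                broken.append((url, link))
            elif link not in seen:
                seen.add(link)
                queue.append(link)
    return visited, broken


visited, broken = crawl("/", SITE)
print(visited)  # ['/', '/about', '/blog', '/blog/post-1', '/contact']
print(broken)   # [('/blog', '/blog/old-post')]
```

Lowering `max_pages` (for example, `crawl("/", SITE, max_pages=3)`) stops the crawl early. Pages beyond the limit are never checked, which is one reason larger sites need a higher crawl allowance.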

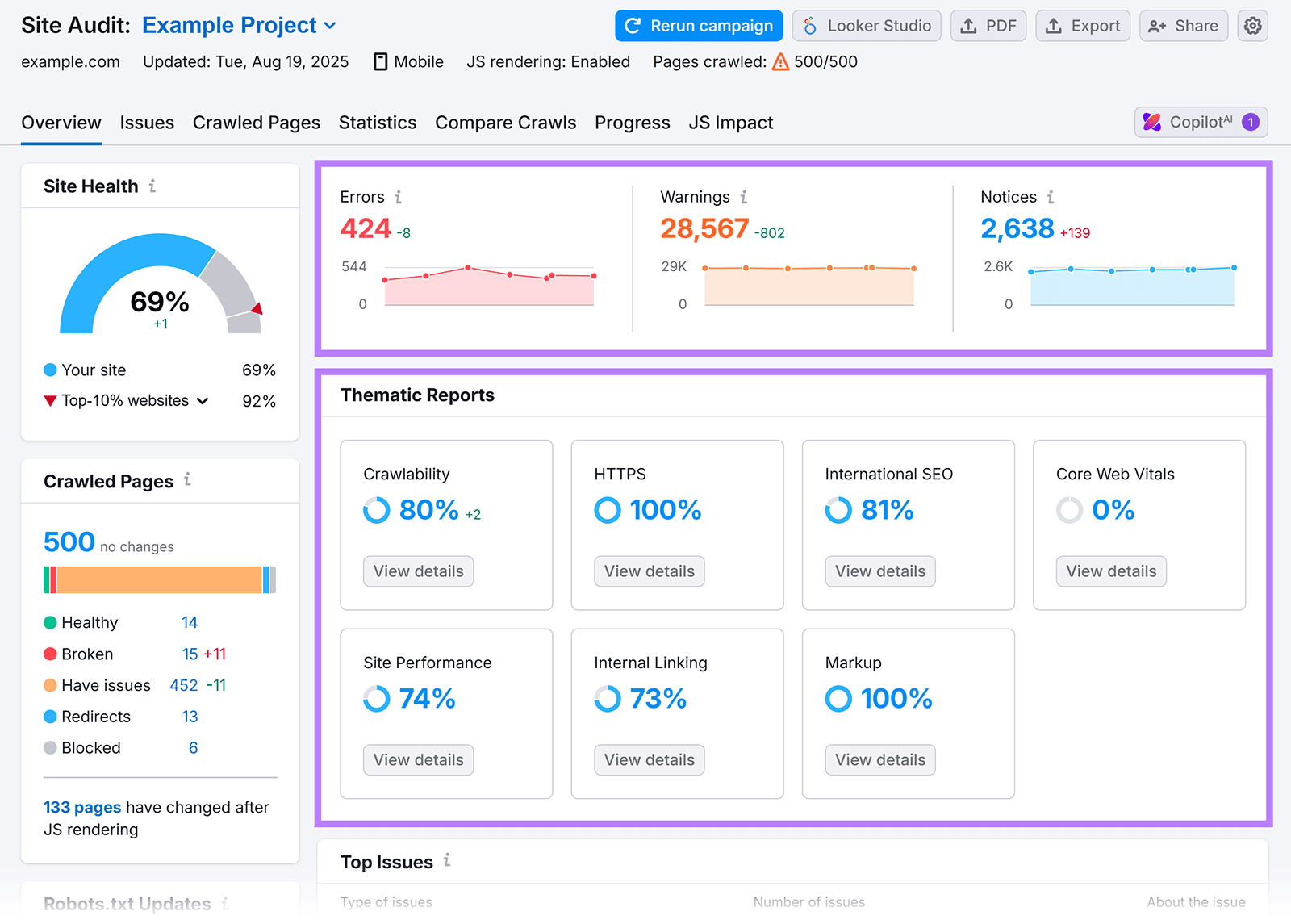

Semrush’s Site Audit is another tool that crawls your site for errors. On top of showing you the errors, it tells you how to fix them, which can help you resolve issues faster.

Here’s how:

Run an audit on your own site by configuring Site Audit.

Once it’s configured, open your audit. You’ll see an overview of errors, warnings, and notices, along with thematic reports and other site information.

Click the “Issues” tab for a list of issues to fix. Prioritize fixing errors first. Then move to warnings and notices. Click “Why and how to fix it” for tips. And click the number beside the issue to open a report that details which areas of your site the issue affects.

You can also see which issues affect different areas of your SEO efforts, such as issues affecting your rankings in AI search.

Work your way through the list and fix each issue. Then re-run the audit to confirm the issues are resolved.
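The workflow above has two parts: fix errors first, then compare a re-run with the previous audit. Both can be sketched in a few lines of Python. The issue descriptions and the `(severity, description)` tuple format are hypothetical and do not reflect Site Audit's export format. The point is the ordering and the before/after comparison.

```python
SEVERITY_ORDER = {"error": 0, "warning": 1, "notice": 2}


def prioritize(issues):
    """Sort (severity, description) pairs: errors first, then warnings, then notices."""
    return sorted(issues, key=lambda issue: (SEVERITY_ORDER[issue[0]], issue[1]))


def compare_audits(before, after):
    """Split issues into those fixed since the last audit, still open, and new."""
    before, after = set(before), set(after)
    return {
        "fixed": prioritize(before - after),
        "remaining": prioritize(before & after),
        "new": prioritize(after - before),
    }


# Hypothetical results from two audit runs.
first_audit = [
    ("warning", "missing meta description on /about"),
    ("error", "broken internal link on /blog"),
    ("notice", "only one internal link to /contact"),
]
second_audit = [
    ("notice", "only one internal link to /contact"),
    ("warning", "missing H1 on /blog/post-1"),
]

for severity, description in prioritize(first_audit):
    print(severity, "-", description)

result = compare_audits(first_audit, second_audit)
print(result["fixed"])
print(result["new"])  # [('warning', 'missing H1 on /blog/post-1')]
```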

Content Crawler Tools

| | Clearscope | Semrush Content Optimizer |
|---|---|---|
| What It Is | A content analysis tool that recommends target keywords, optimal word count, readability level, and provides an outline of competing content (H2s, H3s, etc.). | A tool within Semrush’s Content Toolkit that crawls top-ranking pages for a given keyword and scores your draft against them, with actionable optimization tips. |
| Who It’s For | Content writers who need data-driven guidance for new or existing content. | Writers and marketers who want to audit, grade, and improve existing or drafted content. |

Content crawlers analyze site content—such as blog posts—for factors like keyword usage and word count to help you identify what your content needs to outrank competitors in the SERPs.

Clearscope is a content crawler that tells you which terms your content should include, how many words to write, and what readability level to aim for.

It also gives you an outline of competing content (such as the H2s, H3s, and so on) to help you structure your own article.
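Here is a simplified version of the term and word-count check that content crawlers run. This Python sketch compares a draft with a list of recommended terms and a target word count, and reports which terms are missing. The term list, the 1,200-word target, and the `coverage_report` helper are hypothetical inputs, not output from Clearscope. Real tools derive these recommendations from the top-ranking pages and also weigh term frequency and readability.

```python
import re


def coverage_report(draft, recommended_terms, target_words):
    """Compare a draft with recommended terms and a target word count."""
    text = draft.lower()
    words = re.findall(r"[a-z0-9']+", text)
    # A term counts as covered if it appears as a whole word or phrase.
    missing = [
        term for term in recommended_terms
        if not re.search(r"\b" + re.escape(term.lower()) + r"\b", text)
    ]
    return {"word_count": len(words), "target_words": target_words, "missing_terms": missing}


draft = "A hockey stick should reach your chin. Flex matters for shooting."
report = coverage_report(draft, ["flex", "blade curve", "stick length"], target_words=1200)
print(report)
# {'word_count': 11, 'target_words': 1200, 'missing_terms': ['blade curve', 'stick length']}
```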

Semrush’s Content Optimizer also crawls ranking content and gives you ideas for writing and improving your content so it has a better chance of outranking the competition.

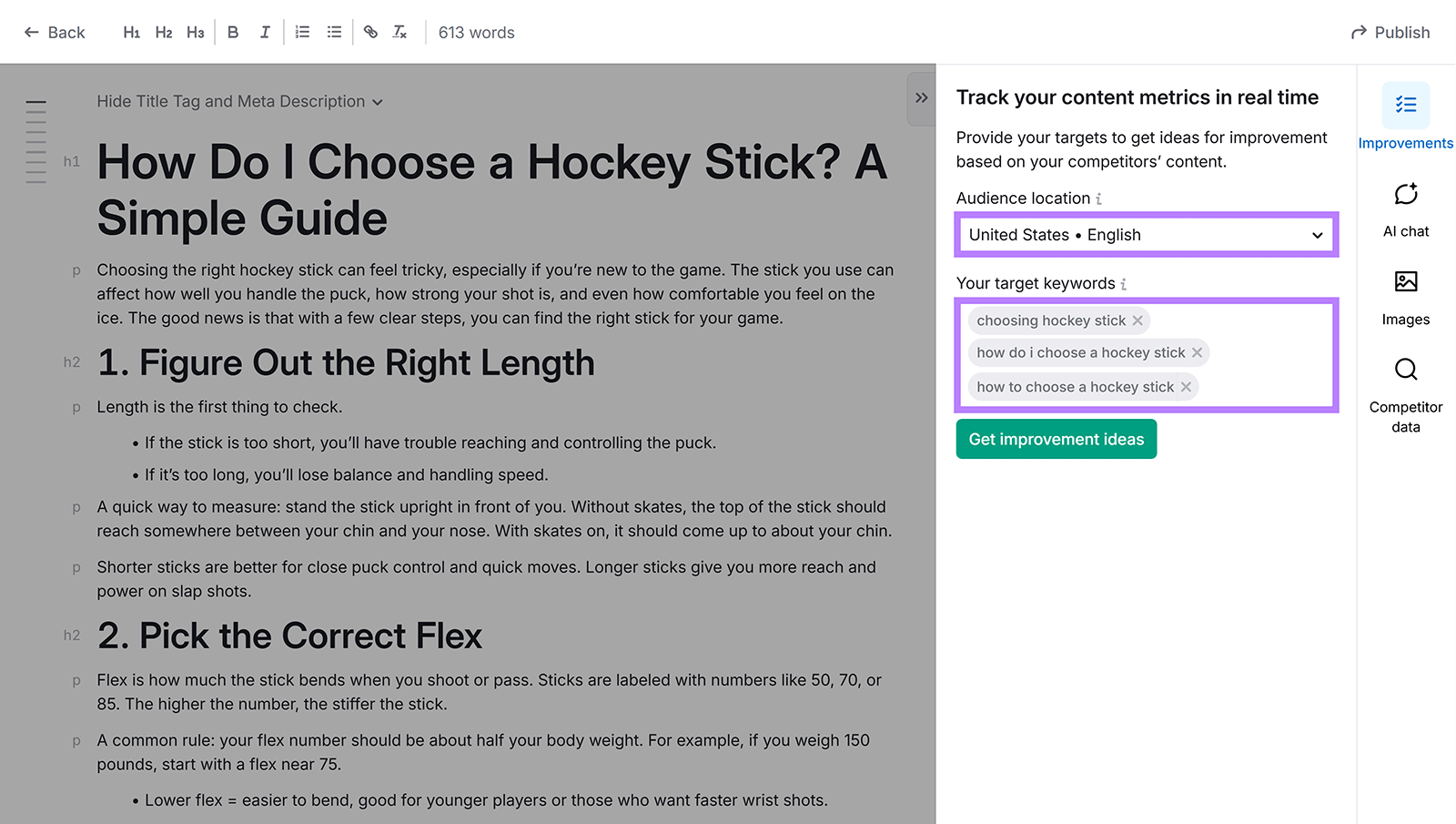

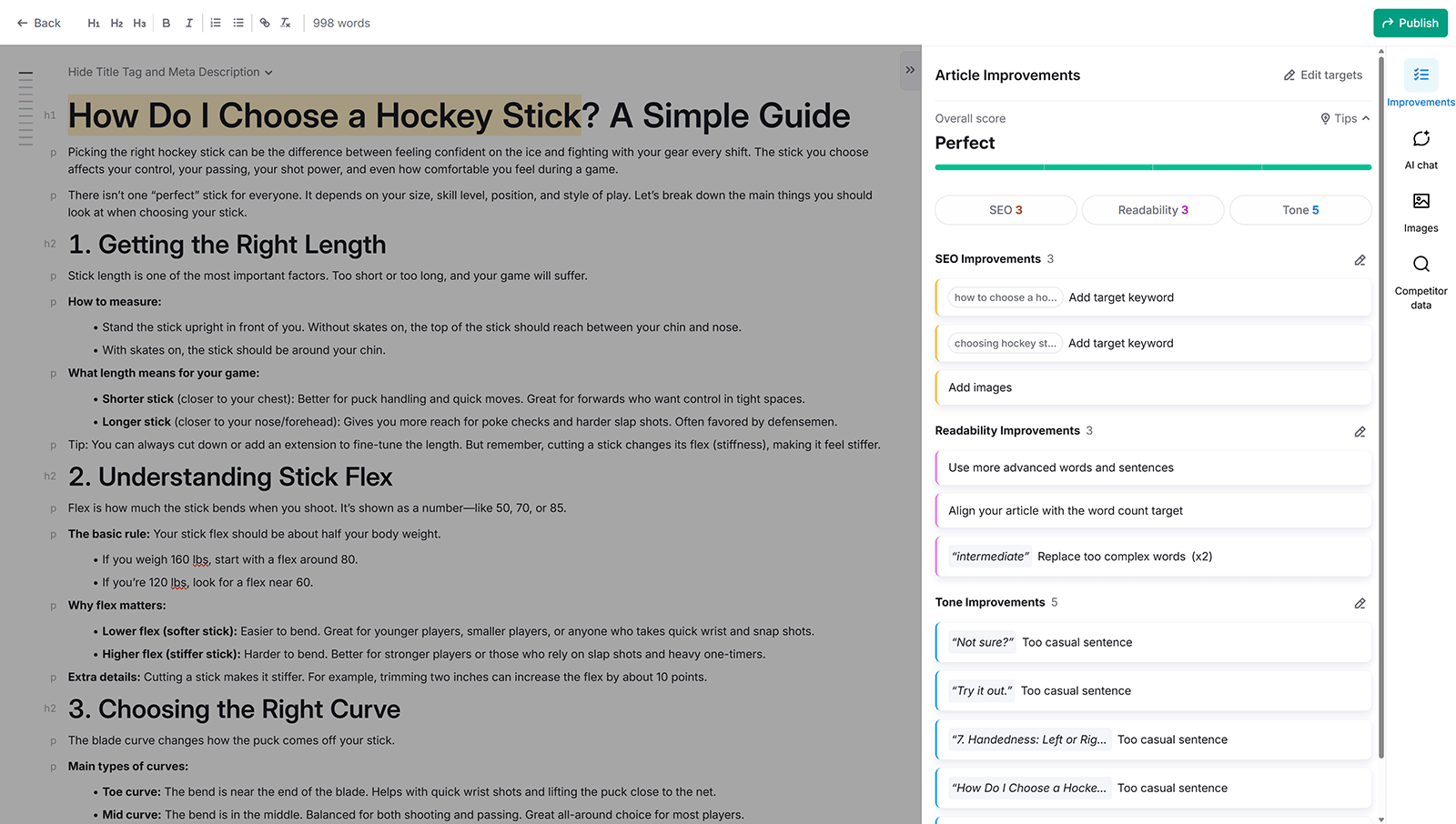

I used Content Optimizer to review two articles for the keywords “how to choose a hockey stick” and “how do I choose a hockey stick.”

The first article ranks near the top of page one, while the second is outside the top 10 results. The higher-ranking article scores “Good” but still has room for improvements, like adding images. Following these tips might help it maintain its rankings.

The second article scores “Mediocre” and could be improved to potentially rank higher.

Here’s how to use Content Optimizer for your own site.

Open the tool and enter your audience’s location and target keywords. You can either write your article from scratch in the editor or copy and paste an existing article to improve.

Then, the tool will review your content and grade it against factors like readability, word count, tone, and the use of related keywords. It also benchmarks your article against top-performing competitors.

And you can use the built-in AI to generate ideas if you get stuck.


How to Block Crawlers from Accessing Your Entire Website

Blocking crawlers from accessing your site can be useful if you want to keep an entire website private, such as a staging environment or a site that’s not ready to launch.

Add this to your robots.txt file if you want to discourage bots from crawling your site:

```
User-agent: *
Disallow: /
```

Where:

- User-agent: * means the rule applies to all bots

- Disallow: / tells bots not to crawl any pages on the site

This only stops compliant crawlers (like Google, Bing, or Semrush). Malicious or non-compliant bots may ignore these rules.

Blocking crawlers can prevent search engines from crawling and indexing your site, which can cause it to disappear from the SERPs. Only use the above directive if you’re sure the site shouldn’t be visible or indexed.
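Before deploying the rules, you can check how a compliant crawler would read them with Python's standard-library `urllib.robotparser`. The staging URL is hypothetical. This parser follows the robots.txt conventions closely but is not identical to any search engine's own parser, so treat it as a quick sanity check.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse rules from text instead of fetching a URL

for agent in ("Googlebot", "Bingbot", "SemrushBot"):
    # can_fetch(user_agent, url) returns False when the rules disallow the URL
    print(agent, parser.can_fetch(agent, "https://staging.example.com/any-page"))
# Googlebot False
# Bingbot False
# SemrushBot False
```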

Keep Crawling to Stay Ahead of the Competition

Keep an edge over the competition by regularly crawling your website and your rivals’ sites.

You can schedule automatic recrawls and reports with Site Audit. Or manually run future crawls to keep your website in top shape.

Try Semrush for free today.